When building the perfect website for your business, it is not enough to focus only on front-end elements such as headers, images, and logos. Search engines also depend on technical features behind the scenes to discover and understand your pages. One of the most basic is a robots.txt file, which follows the Robots Exclusion Protocol (also called the robots exclusion standard). It guides how search engines crawl your site, which helps them spend their effort on the pages you want to appear in the Search Engine Results Page (SERP).

What is a robots.txt?

Have you ever wondered how Google always seems to have a list of relevant websites for whatever you search? Search engines such as Google use automated programs, often called robots, bots, or crawlers, to crawl websites, following links and collecting the content and keywords that match your search. However, most websites have pages they don't want crawled, such as the checkout and payment pages of online stores. A website's robots.txt tells bots which URL paths on the site they are allowed to crawl and which ones they should stay out of. Keep in mind that it is a request that well-behaved crawlers honor, not a lock: the file itself is public, so truly confidential pages still need password protection or other access controls.
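To make this concrete, here is a minimal Python sketch of how a well-behaved crawler could check a robots.txt before fetching a page. It uses Python's standard `urllib.robotparser` module; the domain www.example.com, the `/checkout/` path, and the bot name `ExampleBot` are placeholders, not anything a real site or search engine uses.

```python
import urllib.robotparser

# A small robots.txt written inline for illustration (placeholder paths).
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
"""

def is_crawlable(url, user_agent="ExampleBot"):
    """Return True if the rules above allow user_agent to crawl url."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_crawlable("https://www.example.com/products/sofa"))     # True
print(is_crawlable("https://www.example.com/checkout/payment"))  # False
```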

Where is robots.txt located?

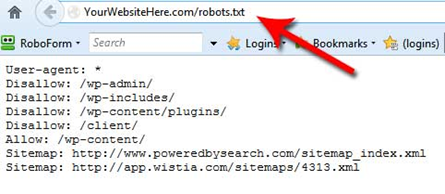

A robots.txt is optional, but if you have one, it must sit at the root of your domain. If a site has no robots.txt, search engines assume they are allowed to crawl everything. To view any site's file, add “/robots.txt” to its root domain, for example “www.example.com/robots.txt”.
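Because the file always sits at the root, you can work out the robots.txt address for any page by keeping only the scheme and host of its URL. The small helper below is a hypothetical illustration built on Python's `urllib.parse`. Note that each host has its own file: a subdomain such as blog.example.com is governed by blog.example.com/robots.txt, not by the main site's file.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url):
    """Return the robots.txt URL that governs page_url.

    robots.txt lives at the root of the host, so the page's path,
    query string, and fragment are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://www.example.com/shop/sofas?page=2"))
# https://www.example.com/robots.txt
```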

How do you create a robots.txt file?

Creating a robots.txt is simpler than you might imagine. It doesn't take hundreds of lines of code, nor does it require a strong background in computer science. There are three main directives you specify in your robots.txt: “User-agent”, “Disallow”, and “Allow”.

User-agent specifies which bots the rules that follow it apply to. For example, if your rules are meant only for Google, you would write Googlebot. If you want the rules to apply to all search engine bots, you can simply write an asterisk (*).
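The sketch below shows how User-agent groups work, using Python's `urllib.robotparser` as a stand-in for a search engine's own parser (real crawlers such as Googlebot use their own implementations, so edge cases can differ). The `/drafts/` and `/search/` paths are hypothetical. A bot that matches a named group follows only that group and ignores the * group, which is why /drafts/ is repeated for Googlebot.

```python
import urllib.robotparser

# Two groups: one only for Google's crawler, one for every other bot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /drafts/
Disallow: /search/
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.example.com/search/?q=sofa"
print(parser.can_fetch("Googlebot", url))  # True: Googlebot follows only its own group
print(parser.can_fetch("Bingbot", url))    # False: Bingbot falls back to the * group
```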

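Disallow tells bots not to crawl certain parts of your site. Most websites organize their URLs by category, with many pages sitting under a larger category. You can disallow individual pages, or you can disallow a whole category at once, because each rule matches every URL whose path begins with the path you give. For example, if you sell different types of furniture and don't want Google to crawl anything under Living Room, you could add a rule such as “Disallow: /living-room/”, using whatever path actually appears in your URLs (paths are case-sensitive and cannot contain raw spaces). Major search engines such as Google and Bing also understand the asterisk (*) as a wildcard, but a trailing asterisk is unnecessary here because the rule already covers everything beneath that path.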
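Here is the furniture example as a hedged Python sketch, assuming the category's URLs live under a hypothetical /living-room/ path. It checks a few URLs against a single Disallow rule with `urllib.robotparser`. Python's parser does not understand * wildcards inside paths, which is one more reason to prefer the plain directory form.

```python
import urllib.robotparser

# One directory rule: every URL whose path starts with /living-room/ is blocked.
ROBOTS_TXT = """\
User-agent: *
Disallow: /living-room/
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Paths are case-sensitive, so /Living-Room/ is not covered by the rule.
for path in ["/living-room/", "/living-room/sofas/grey-sofa",
             "/dining-room/tables/", "/Living-Room/"]:
    allowed = parser.can_fetch("ExampleBot", "https://www.example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```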

Sometimes you want to make an exception within a disallowed category. For example, if you disallowed everything under Living Room but want bots to crawl the sofa section, you can add an Allow rule, such as “Allow: /living-room/sofas/”, to let bots into just the sofa pages. Google follows the most specific (longest) matching rule, so the Allow rule wins for URLs under the sofa section.
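The sofa exception can be sketched the same way, again assuming hypothetical /living-room/ and /living-room/sofas/ paths. The Allow line comes first because Python's `urllib.robotparser` applies the first rule that matches, whereas Google applies the most specific one; with this ordering both approaches give the same answer.

```python
import urllib.robotparser

# Block the living-room category but carve out the sofa section.
ROBOTS_TXT = """\
User-agent: *
Allow: /living-room/sofas/
Disallow: /living-room/
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def allowed(path):
    """Check a path on the placeholder domain for a generic bot."""
    return parser.can_fetch("ExampleBot", "https://www.example.com" + path)

print(allowed("/living-room/sofas/grey-sofa"))  # True: the Allow rule matches first
print(allowed("/living-room/armchairs/"))       # False: only the Disallow rule matches
```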

Is there an easier way to create a robots.txt?

Most people don’t want to go through the hassle of writing out their whole robots.txt, especially if they have a lot of pages they want to disallow. Fortunately, there are many online tools that can help you create a robots.txt at just the click of a button.

LXR Marketplace is a great tool, as it has information on all aspects of SEO, including robots.txt. The website offers a Robots.txt Generator service, where you simply input your website URL, your sitemap URL, a directive, the user-agents you are specifying, and the pages you want to allow or disallow. The application then creates your robots.txt for you.
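Under the hood, a generator like this mostly assembles text. The function below is a hypothetical sketch of that idea, not LXR Marketplace's actual implementation: it takes the same kinds of inputs (user-agents, allowed and disallowed paths, and a sitemap URL) and returns the robots.txt contents.

```python
def build_robots_txt(user_agents, disallow=(), allow=(), sitemap=None):
    """Assemble robots.txt text from simple inputs (hypothetical helper)."""
    # One group: every listed user-agent shares the rules that follow.
    lines = [f"User-agent: {agent}" for agent in user_agents]
    # Allow rules are written first, which suits the common case of
    # carving an exception out of a disallowed directory.
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"

print(build_robots_txt(
    ["*"],
    disallow=["/living-room/"],
    allow=["/living-room/sofas/"],
    sitemap="https://www.example.com/sitemap.xml",
))
```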

How do you validate your robots.txt?

Just as you double-check for errors when you are writing essays and submitting assignments, you need to double-check for errors in your robots.txt once you have made it. Luckily, as easy as it is to create a robots.txt, it is just as simple to validate your robots.txt. A fast and easy way to test your robots.txt for errors is to use the LXR Marketplace Robots.txt Validator. The validator will check for syntax mistakes, and it gives tips based on the rules of the robots.txt and the bots that crawl your website. This robots.txt tester will show you your mistakes, and you can correct them before you upload it to your website.
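To give a sense of what a syntax check involves, here is a deliberately small, hypothetical linter in Python. It is not the LXR Marketplace validator and catches far fewer problems; it only flags missing colons, unrecognized field names, Allow or Disallow rules that appear before any User-agent line, and paths that don't start with “/” or “*”.

```python
# Field names this sketch recognizes; real crawlers may accept others.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}

def lint_robots_txt(text):
    """Return (line_number, message) pairs for problems found in text."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field not in KNOWN_FIELDS:
            problems.append((number, f"unknown field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems

sample = "Disallow: /tmp/\nUseragent: *\nUser-agent: *\nDisallow living-room\nDisallow: living-room/\n"
for number, message in lint_robots_txt(sample):
    print(f"line {number}: {message}")
```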

How do you add robots.txt to your website?

Once you have created and validated your robots.txt with no errors, you can upload it to the root directory of your website. First, make sure your robots.txt is saved as a plain-text (UTF-8) file named exactly robots.txt. The easiest way to do this is to copy your rules into a plain-text editor and save the file to your computer. Then all that is left is uploading it to the root directory of your website. For example, if your website is “www.iloveseo.com”, your file should be reachable at “www.iloveseo.com/robots.txt”.
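The saving step can also be scripted. This sketch assumes your site is served from a local folder you can write to. It saves the rules as a UTF-8 plain-text file named robots.txt at the top of that folder, then reads the file back with `urllib.robotparser` as a quick sanity check. A temporary directory stands in for your real web root, whose location depends on your hosting setup, and the `/checkout/` rule is a placeholder.

```python
import tempfile
import urllib.robotparser
from pathlib import Path

def save_robots_txt(web_root, content):
    """Save content as a UTF-8 plain-text file named robots.txt in web_root."""
    path = Path(web_root) / "robots.txt"  # top level of the site, not a subfolder
    path.write_text(content, encoding="utf-8")
    return path

def crawlable_per_file(path, url, user_agent="ExampleBot"):
    """Read the saved file back and report whether url would be crawlable."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(Path(path).read_text(encoding="utf-8").splitlines())
    return parser.can_fetch(user_agent, url)

# A temporary directory stands in for the real web root in this sketch.
with tempfile.TemporaryDirectory() as web_root:
    saved = save_robots_txt(web_root, "User-agent: *\nDisallow: /checkout/\n")
    print(saved.name)  # robots.txt
    print(crawlable_per_file(saved, "https://www.iloveseo.com/checkout/"))  # False
```

Once the file is live, the same `RobotFileParser` can read it straight from the site by calling `set_url("https://www.iloveseo.com/robots.txt")` followed by `read()`.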