Login to continue

Don't have an account? Register here.

Continue With Email

By signing in, I agree to the

Privacy Policy and

Terms Of Service.

Change Password

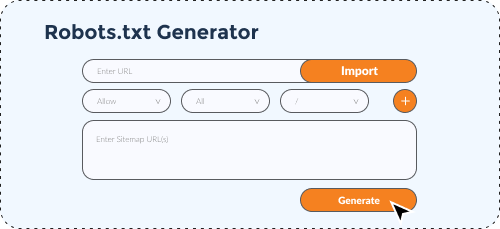

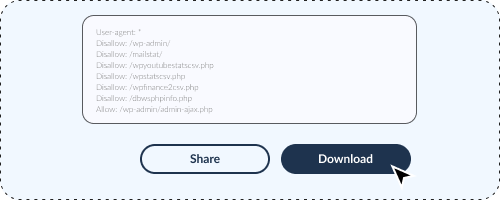

Robots.txt Generator


Generated Robots.txt

Instruct search engine robots on how to crawl your site.
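As a rough illustration of what a generator like this emits, here is a minimal Python sketch. The function `generate_robots_txt` and its input shape (a mapping from user-agent to disallowed path prefixes, plus an optional sitemap URL) are hypothetical choices for this example, not this tool's actual options. The result is then fed to the standard-library `urllib.robotparser` to check how a compliant crawler would read the rules.

```python
from urllib.robotparser import RobotFileParser


def generate_robots_txt(rules, sitemap=None):
    """Build robots.txt text from {user_agent: [disallowed path prefixes]}.

    Hypothetical helper for illustration only; not the tool's real API.
    """
    lines = []
    for agent, disallowed in rules.items():
        lines.append(f"User-agent: {agent}")
        if not disallowed:
            # An empty Disallow value means the agent may crawl everything.
            lines.append("Disallow:")
        for path in disallowed:
            lines.append(f"Disallow: {path}")
        lines.append("")  # a blank line separates user-agent groups
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    # Normalize to exactly one trailing newline.
    return "\n".join(lines).rstrip("\n") + "\n"


robots = generate_robots_txt(
    {"*": ["/admin/", "/tmp/"], "BadBot": ["/"]},
    sitemap="https://example.com/sitemap.xml",
)
print(robots)

# Interpret the generated file the way a compliant crawler would.
parser = RobotFileParser()
parser.parse(robots.splitlines())
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/"))        # True
print(parser.can_fetch("BadBot", "https://example.com/blog/"))           # False
```

Keep in mind that robots.txt is advisory: well-behaved crawlers honor it, but it does not by itself prevent access to the listed paths.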

